Week Two: Fail-error

This course was prefaced with the promise of failure. Not in the professor-wants-you-to-fail-the-class sort of way, rather, a promise that we as students will experience numerous small failures, yet we must embrace them as a learning tool to grow as digital historians. With that in mind, I’m happy to report that I’ve definitely experienced failure this week! Only two of the five activities related to course work went on without a hitch, and the last of them still remains incomplete (for now…). From typos to error messages with seemingly impossible origins, I experienced it all this week, and through both my own googling and the help of others, I feel like I’m improved because of it.

Anaconda, RStudio, and the Impossible WAV

This week, the first thing on our to-do list was to install Anaconda, a python-based platform for data science computing. I’ve worked with Python many times both academically and professionally, but have only briefly dabbled with R in tutorials by The Programming Historian, so I was eager to get started and learn more! Anaconda was easy and quick to install via Homebrew, and after the quick command of brew cask install anaconda it seemed that everything was good to go, and the Anaconda Navigator was even installed in the right place! Yet when I went to verify the version installed, I was presented with the message conda: command not found. I was quick to notice that I had installed Anaconda for Python 3.7, and I was taken back to the first semester of my first year in university, in which my professor for introductory computer science professed his deep dislike for Python3. I had a suspicion and after checking what version of Python my laptop defaulted to, I found my suspicion to be correct. Being made to use Python2 in my first year of computer science, I manually set the default to remain at Python 2.7. Now, for the sake of applications installed that rely on Python, it is (or at least was in 2017) better to keep Python 2.7 as the system default, but different versions can be temporarily selected to use and managed via Homebrew installation and Anaconda environments. Rather than uninstalling the version of Anaconda I had, I reinstalled all available versions of Python, then set my working environment to run Python 3.7. All worked well! But I was still getting the message saying that the conda command did not exist! Since what seemed to be the obvious solution failed me, I turned to Google. The solution? When installing via Homebrew, Anaconda does not get a chance to ask the user to agree to its terms and conditions. Turns out all I needed to do was navigate to the directory of the Anaconda install and agree to the terms and conditions via command line prompts. After I did this, Anaconda was found and usable in the command line!

Following this, I was able to swiftly work my way through the activities using wget and APIs— the two activities I didn’t experience any struggles with. Wget is a very cool little program that I briefly had utilized before when I participated in a data science workshop at a conference I attended in January. The lesson was an excellent refresher, and much more interesting to me when applied to history rather than consumer statistics. In regards to APIs, I just finished a course on web development and applications in the Winter semester so I’m VERY familiar and comfortable with using APIs presently. The following lesson and activity about OCR is where things started to go awry. I had witnessed object character recognition in action before, but never thought of programming something to perform it myself. The R language looked readable enough from looking at the code provided, and so I went on to the step of opening RStudio in the Anaconda Navigator. I clicked, the icon swirled as the application loaded, it stopped, I waited… nothing happened. Odd. I searched RStudio in the file manager, and it appeared greyed out with a “no” symbol over the application icon. If I clicked it, I was presented with a pop-up stating that my computer cannot open application due to certain elements missing or not installing incorrectly. I figure this was yet another issue with my Anaconda install, so I wiped Anaconda from my system and installed it again, but this time using the graphical downloaded offered on the Anaconda website. Same error came up again for RStudio. Okay. Deleted Anaconda again and installed using the final other option, the command line download also available on the Anaconda website. When RStudio didn’t work once again, I removed it from the Anaconda Navigator and installed through the command line via conda install -c r rstudio. This install was also not successful, but I was given a better clue at what was going wrong with a warning the command line output: Unable to create environments file. Path not writable to environment location.. This was something I could google! Apparently, this a common issue with Anaconda Navigator not installing using the specified edition of Python or R, but if you created a new environment manually specifying the version of Python you want to use, the correct version of RStudio installed should be able to install and launch. I did this and it worked! Then I moved on to the actual programming and realized that no, actually, it didn’t really work. I was able to launch the latest version of RStudio, yes, but when installing packages, not a single one had the needed dependency and I was being prompted to install these dependency one-by-one through Homebrew. I was absolutely not about to spend hours doing that, so I gave up on Anaconda’s RStudio! The easiest solution was to just directly install RStudio, and after all that struggle I decided to accept my failure and go the easy route. After that the rest of the activity was fun, educational, and a breeze to do! The biggest challenge I faced was a small typo— missing closing brackets— that was quickly noticed and fixed following a good meal to restore my brain power.

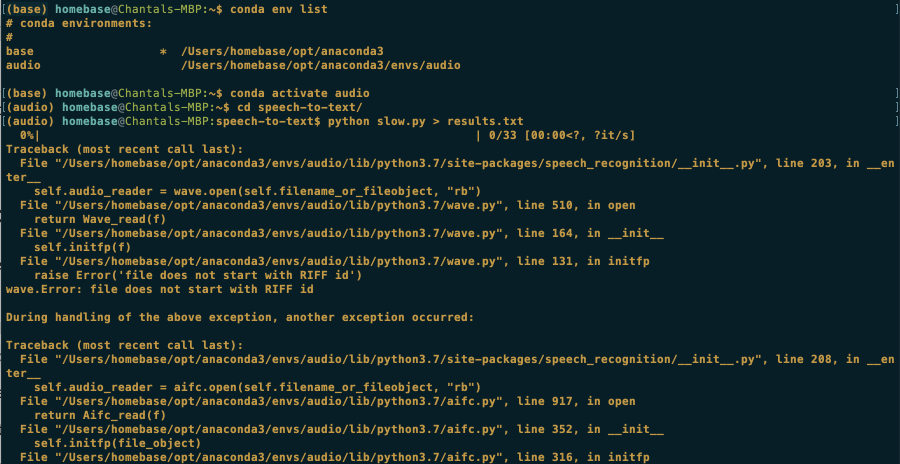

My final and unresolved error resulted from the bonus activity, learning to convert speech to text through the use of Google APIs. For the most part, the lesson was well done and easy to navigate. The tutorial on accessing the Google Cloud Console and getting the API key was out-of-date which led to some confusion, but after clicking through and fiddling with the current user interface, figured out what had been changed relatively easily. The next challenge I faced was using ffmpeg to separate the downloaded and converted WAV file into 30 second clips. This was a simple fix, the command ffmpeg -i source/mlk.wav -f segment -segment_time 30 -c copy parts/out%09d.wav was met with the error Could not write header for output file #0 (incorrect codec parameters ?): No such file or directory, and in looking at the cloned repository I was working in, there was not folder titled “parts” that the clips could be placed in like the command specifies. I made the folder and ran the command again, and now there was success. I played each of the clips briefly, and all were correctly divided and ready for transcription! I entered the command which would send this audio to the Google API for processing, a loading bar appeared, but then an extensive error appeared (part of it shown as the header photo in this week’s post) that began with wave.Error: file does not start with RIFF id and finalized ValueError: Audio file could not be read as PCM WAV, AIFF/AIFF-C, or Native FLAC; check if file is corrupted or in another format. It was clear that my audio clips were not being seen as a WAV file, but then what were they? Did they convert incorrectly? I confirmed via the command file [filename] that my clips WERE indeed the correct format, and in fact, the preferred format of WAV file that wave.py is able to identify and process. They were also definitely not corrupt because I was able to listen to all of them without issue. Googling my error gave me three results of significance, the first being that many have apparently encountered this issue but there aren’t many solutions. The other two results were potential solutions. One GitHub poster suggested it was an issue with Python 3.7, and if you created an environment the used any version before that, the issue would be fixed. I tried this, but, alas, still encountered the same set of errors. The second finding on this issue was within the comment section of the Kras tutorial we were linked to in this lesson, in which two of those who had followed the tutorial encountered my exact issue (one of those people being Dr. Graham from 2018)! Unfortunately, the solution given was too vague to follow exactly— the problem was conflicting packages in the user’s Python install, and they solved this by creating a new Python3 environment and reinstalling ffmpeg. I did both of these things but nothing has changed. I am completely and totally flummoxed by this error when all seems to be correct— hence it being dubbed the Impossible WAV— and, sadly, have currently given up on solving it. I want to fix this and learn how to use OCR at some point because it’s a very useful skill to have, but I’m temporarily accepting failure so that I don’t go insane. That’s how a lot of my journey learning computer science has gone, honestly.

The Price of Producing

All of the learning experiences I went through this week, all of the struggles, and all of the failure, has really made me think about the cost of work produced by digital historians. As the saying goes, time is money! And the digitization of work, whether that be simply publishing an article in an open access journal, or creating and using a static site to publish interactive work, costs both literally and figuratively. The price of open access publishing is that the author is unlikely to be paid much if at all. A professional publishing a paper in an online, free journal is making a hopeful step towards open source academics, but at their own monetary expense due to the lack of financial compensation for the hours of work they put into researching and writing that paper. Digital historians, many of which create their own venues to host and publish their research may accrue many other costs outside of open access publication, paying for domains for websites where they can publish their work and present it in a more dynamic fashion, and beyond this monetary cost, the cost of their time is rarely paid for yet exponential. I recognize that I’m fortunate to be taking a class that is providing me with guidance and explanation of all these tools that can be applied to digital history, but digital history is a recent field, and those who are teaching me taught all of this content to themselves. On top of the research a historian traditionally performs is additional hours spent programming tools to analyze select documents or transcribing audio to form a new and more thorough perspective on their chosen topic. All this, mostly done with the support of peers online and without support from academia.

The primary tool for research in academics is the library, and in the present day, the university library’s web portal. At Carleton University, when you search for something via this portal, the results you get are usually either books or journal articles, but from paid-for sources such as JSTOR or Taylor & Francis Online. Any student or faculty can download this content free of charge, but as long as they are apart of the institution. Despite there being many, there is little support for open access materials, even if published by professionals and peer-reviewed. They rarely show up in the library’s web search. Yet there’s ethical conflict here, because while university libraries like MacOdrum are primarily supporting membership-based content, at least there’s a guarantee that those creating the content that we’re reading are getting paid for what they have published. Google finds open source publications more easily than this search portal tool that is pushed to be our first point of research in academia, but this tool does not give all the information that may be useful, and the resources found by it reference other materials dubbed as “academic” that might be behind yet another paywall I, as a student, am unable to climb.

This pretty much summarises why I’m pro-open source. The layers upon layers of paywalls are restricting knowledge from those who might need it, whether apart of the academia or not. While there is issue with paying for the work being published, there is also a greater issue of the privilege surrounding accessing verified, quality information.